Retrieve Spark Event Logs

When you want to analyze the performance of your workloads, you’ll typically need to check the Spark Web UI to identify areas of improvement or just to detect events that are de-gradating the performance in your application. The Spark Web UI uses the Event Logs that are generated by each job running in your cluster to provide detailed information about Jobs, Stages and Tasks of your application that provides aggregated metrics that can help you to troubleshoot performance issues.

These files are extremely portable, as they can be collected across different engines or environments and stored in the same Spark History Server to have a single interface where you can review results of different benchmark results across different environment or cloud providers.

When using Amazon EMR, the Spark Event logs are enabled by default and are automatically stored on the HDFS of the cluster where the job was running under the HDFS path /var/log/spark/apps/

$ hdfs dfs -ls -R /var/log/spark/apps/

-rw-rw---- 1 hadoop spark 408384 2023-09-08 21:00 /var/log/spark/apps/application_1694206676971_0001

If you have Event Logs coming from a different environment or cluster, you can easily store them in this folder, and the Spark Web History Server will automatically pick them and you’ll be able to review the information of the job on the Spark History Server.

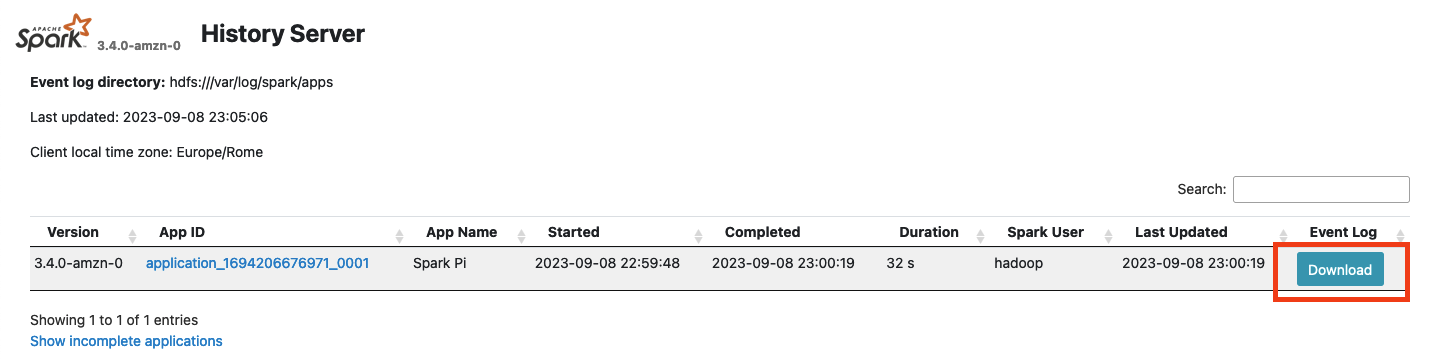

As alternative, if you want to export the Event Logs from a running cluster, you can also download them manually from the Spark Web History server from the main page as shown in the image below.

Finally, if you’re using on premise cluster or any third-party Spark environment, you can automatically enable the Spark Event logs using the following Spark configurations:

- spark.eventLog.enabled (Boolean) Determine if you want to enable or disable event logs collection. False by default

- spark.eventLog.dir (String) Location where to store the event logs. Can be an Object Store as Amazon S3, Azure Filesystem, or any path recognized by the Hadoop Filesystem API (e.g. HDFS, Local Filesystem, etc.)

Below an example to manually enable the Spark event logs in your Spark application.

spark-submit \

--name "Example App" \

--conf spark.eventLog.enabled=true \

--conf spark.eventLog.dir=hdfs:///tmp/spark \

...